Map Reduce is a programming model for processing large amounts of data in parallel. It can be viewed as a three-stage process: map, shuffle and reduce. One can think of Map Reduce as loosely analogous to merge sort or quick sort, where a splitting operation is followed by a joining operation.

Map Reduce is a concept inspired by functional programming languages. Don't be scared: you don't need to know a functional programming language to read this. For our purposes, the key feature of these languages is that functions can be passed as arguments to other functions. Let's see this using an example of map and reduce.

Suppose we want to double all numbers stored in an array. A simple program would be:

int[] array = {1, 2, 3};

for (int i = 0; i < array.length; i++)

array[i] = array[i] * 2;

A map-style version of this simple code, written as pseudocode, would be:

map(function(x) { return x * 2; }, array)

You may agree that this saves a few lines of code, but is that its only use? Here the array contains just 3 elements. Imagine instead that you have billions of elements to process and thousands of machines: each machine can run the map operation on its own portion of the data in parallel, which saves a lot of time.
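To make the idea a little more concrete, here is a minimal sketch in Java. It is not Hadoop code: it only uses the standard streams library, and the class name `MapSketch` is made up for this illustration. The `parallel()` call spreads the work over the CPU cores of one machine, which is a small-scale stand-in for spreading it over many machines.

```java
import java.util.Arrays;

// Hypothetical class name, used only for this illustration (not part of Hadoop).
class MapSketch {

    // Applies the "double it" function to every element, like map(f, array).
    // parallel() allows the runtime to process elements on several CPU cores.
    static int[] doubleAll(int[] input) {
        return Arrays.stream(input)
                .parallel()
                .map(x -> x * 2)
                .toArray();
    }

    public static void main(String[] args) {
        int[] array = {1, 2, 3};
        System.out.println(Arrays.toString(doubleAll(array))); // prints [2, 4, 6]
    }
}
```

Because each element is doubled independently of the others, the order in which the elements are processed does not matter, and that is exactly what makes a map operation easy to parallelize.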

A reduce operation is similar to a map operation in that it also takes a function as an argument. Let's see a very easy example. Now that you have doubled every element using the map operation, you want to add up all these values. What do you do? You could write a small piece of code:

int sum = 0;

for (int i = 0; i < array.length; i++)

sum = sum + array[i];

The same piece of code can be written as:

reduce(function(a, b) { return a + b; }, array, 0)
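In real Java, the same fold could look roughly like the sketch below. It relies on the standard `IntStream.reduce` method; the class name `ReduceSketch` and the helper `sum` are made up for this example and have nothing to do with Hadoop's own API.

```java
import java.util.Arrays;

// Hypothetical class name, used only for this illustration (not part of Hadoop).
class ReduceSketch {

    // Folds the array into a single value: start from 0, then repeatedly
    // combine the running total (a) with the next element (b).
    static int sum(int[] values) {
        return Arrays.stream(values).reduce(0, (a, b) -> a + b);
    }

    public static void main(String[] args) {
        int[] doubled = {2, 4, 6};
        System.out.println(sum(doubled)); // prints 12
    }
}
```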

The function passed to reduce adds a and b, and the result becomes the new value of a when the next element is processed. The 0 is the initial value of a. Now that we have seen what the map and reduce functions are, it is time to see how they are implemented in Hadoop. In addition to the map and reduce functions, there is an all-important shuffle step, which we will discuss in detail in another post.
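Until then, the three stages can be previewed with a small in-memory sketch. The word-count task is an assumed example chosen for illustration, and the class `ThreeStageSketch` is hypothetical; real Hadoop runs these stages across many machines and does the shuffle for you.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical class name, used only for this illustration (not part of Hadoop).
class ThreeStageSketch {

    static Map<String, Integer> wordCount(List<String> lines) {
        // Map: turn every line into (word, 1) pairs.
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (!word.isEmpty()) {
                    pairs.add(Map.entry(word, 1));
                }
            }
        }

        // Shuffle: bring together all values that share the same key.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                   .add(pair.getValue());
        }

        // Reduce: collapse each key's list of values into one result.
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : grouped.entrySet()) {
            int total = 0;
            for (int value : group.getValue()) {
                total += value;
            }
            counts.put(group.getKey(), total);
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(wordCount(List.of("a b a", "b c"))); // prints {a=2, b=2, c=1}
    }
}
```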

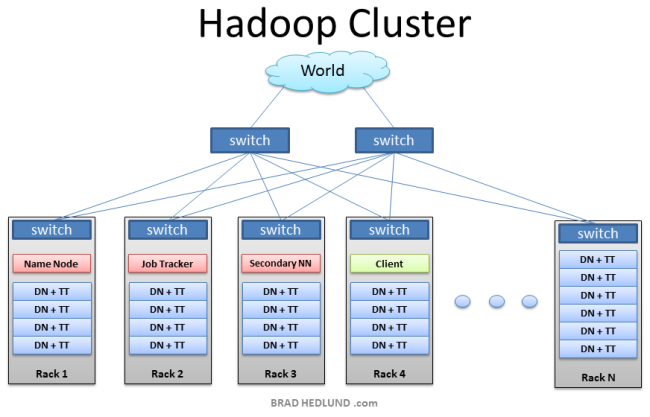

Let's look at what really happens when a Map Reduce job is submitted to Hadoop. Eight steps take place:

1. The Map Reduce code we write sets up a job client which runs the job.

2. This job client requests a new job ID from the job tracker.

3. Next, the job client copies all the resources the job needs, such as the jar files we have written and want to execute, to a shared file system.

4. Once all the required files are available in the shared file system, which is usually HDFS, the job client tells the job tracker that it can start the job.

5. The job tracker then works out how to hand the input splits (chunks of the input data, not code) to the mapper processes so as to increase efficiency and throughput. The input splits are obtained from the HDFS shared file system.

6. Remember from the previous post that each task tracker continuously sends signals (heartbeats) to the job tracker, reporting its status and asking for new tasks.

7. Now that the job tracker has work to hand out, it allocates either a map task or a reduce task to the task tracker, which in turn fetches its share of the input splits from the HDFS shared file system.

8. After receiving these input splits, the task tracker starts a child process, which may run in its own JVM, to process them.
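The hand-off in steps 4 to 8 can be pictured with a toy simulation. Everything in it is an assumption made for illustration: the class `JobTrackerSketch`, its methods, and the split and tracker names are invented here and are not Hadoop's API. It only shows the pattern of task trackers asking for work and the job tracker handing out one input split per request.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

// Toy model only: this class is hypothetical and is not Hadoop's API.
class JobTrackerSketch {
    private final Queue<String> pendingSplits = new ArrayDeque<>();
    private final List<String> assignments = new ArrayList<>();

    // Steps 4-5: the job starts and its input splits are queued for assignment.
    void submitJob(List<String> inputSplits) {
        pendingSplits.addAll(inputSplits);
    }

    // Steps 6-7: a task tracker reports in and is handed the next split, if any.
    // Returns null when there is no work left.
    String heartbeat(String taskTrackerName) {
        String split = pendingSplits.poll();
        if (split != null) {
            // Step 8: the real task tracker would now start a child process.
            assignments.add(taskTrackerName + " -> " + split);
        }
        return split;
    }

    // Lets the trackers take turns sending heartbeats until no split is left.
    static List<String> simulate(List<String> splits, List<String> trackers) {
        JobTrackerSketch jobTracker = new JobTrackerSketch();
        jobTracker.submitJob(splits);
        boolean assignedSomething = true;
        while (assignedSomething) {
            assignedSomething = false;
            for (String tracker : trackers) {
                if (jobTracker.heartbeat(tracker) != null) {
                    assignedSomething = true;
                }
            }
        }
        return jobTracker.assignments;
    }

    public static void main(String[] args) {
        List<String> log = simulate(
                List.of("split-0", "split-1", "split-2"),
                List.of("tracker-A", "tracker-B"));
        log.forEach(System.out::println);
    }
}
```

With three splits and two trackers, the first tracker ends up with two splits and the second with one. A real job tracker also takes into account where the data lives and which trackers have free slots, which this sketch ignores.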

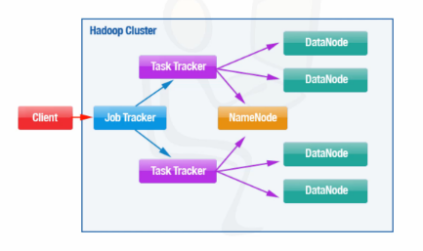

The sequence of actions can be better understood by looking at this diagram: